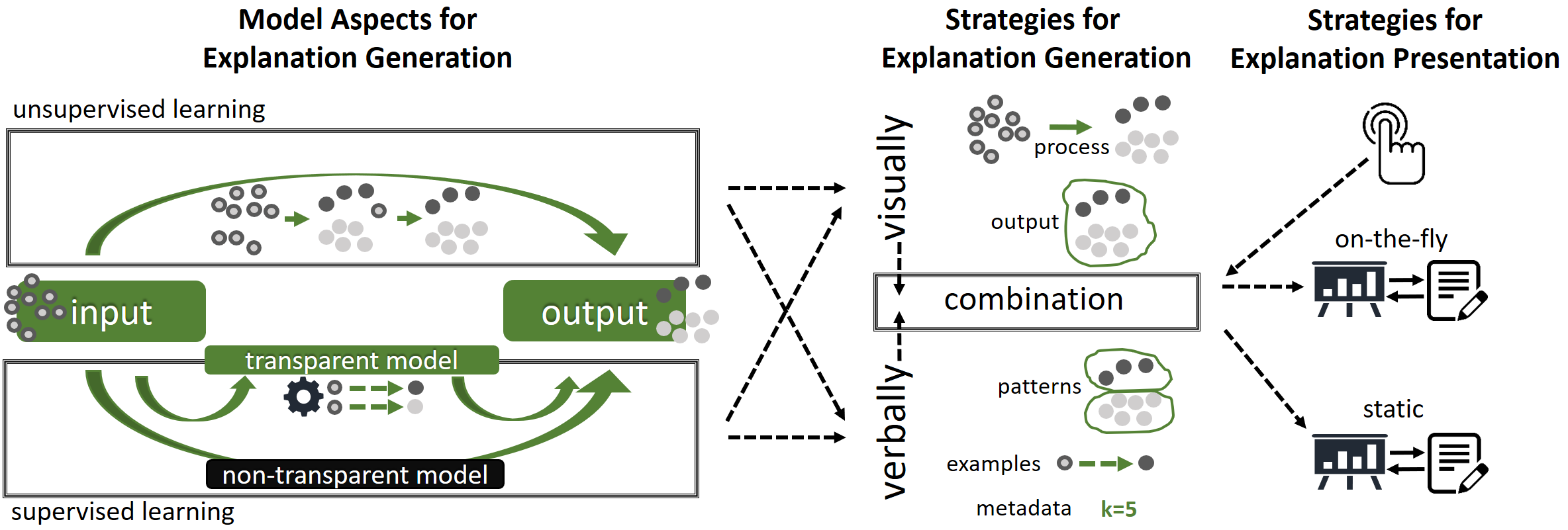

In this position paper, we argue that combining visualization and verbalization techniques helps create broad and versatile insights into the structure and decision-making processes of machine learning models. Explainability of machine learning models is emerging as an important area of research: insights into the inner workings of a trained model allow users and analysts alike to understand the models, develop justifications, and gain trust in the systems they inform. Explanations can be generated through different media, such as visualizations and verbalizations. Both are powerful tools that enable model interpretability. However, while their combination is arguably more powerful than either medium alone, they are currently applied and researched independently. To support our position that combining the two techniques is beneficial for explaining machine learning models, we describe the design space of such a combination and discuss the arising research questions, gaps, and opportunities.

Rita Sevastjanova, Fabian Beck, Basil Ell, Cagatay Turkay, Rafael Henkin, Miriam Butt, Daniel Keim, Mennatallah El-Assady

VIS Workshop on Visualization for AI Explainability (VISxAI) as part of IEEE VIS 2018

2018

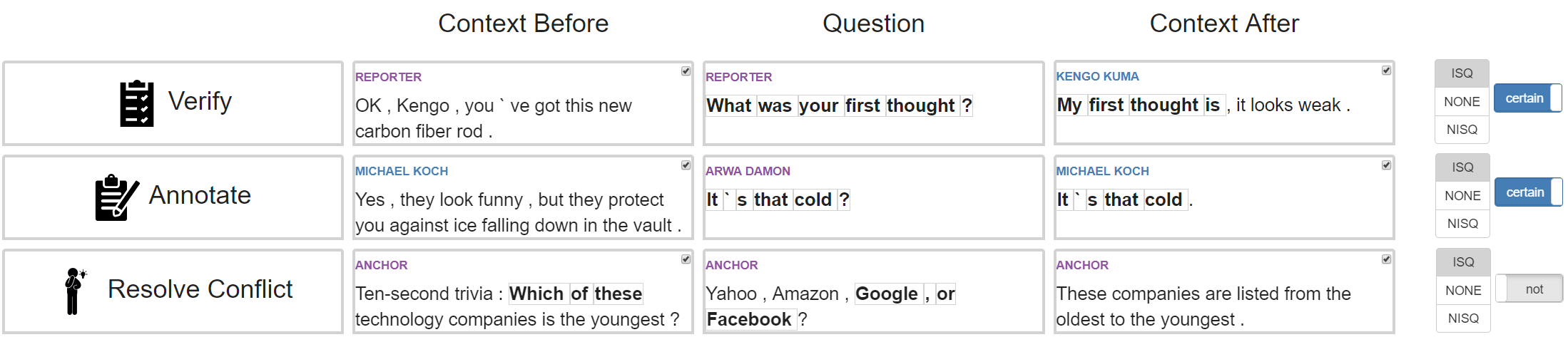

We propose a mixed-initiative active learning system to tackle the challenge of building descriptive models for under-studied linguistic phenomena. Our use case is the linguistic analysis of question types, specifically understanding what characterizes information-seeking vs. non-information-seeking questions (i.e., whether or not the speaker wants to elicit an answer from the hearer) and how automated methods can assist with this analysis. Our approach is motivated by the need for an effective and efficient human-in-the-loop process in natural language processing that relies on example-based learning and provides immediate feedback to the user. In addition to the concrete implementation of a question classification system, we describe general paradigms of explainable mixed-initiative learning, which allow the user to access the patterns identified automatically by the system rather than being confronted with a machine learning black box. Our user study demonstrates that the system can provide deep linguistic insight into this particular analysis problem. The results of our evaluation are competitive with the current state of the art.

Rita Sevastjanova, Mennatallah El-Assady, Annette Hautli-Janisz, Aikaterini-Lida Kalouli, Rebecca Kehlbeck, Oliver Deussen, Daniel Keim, Miriam Butt

VIS Workshop on Data Systems for Interactive Analysis (DSIA) as part of IEEE VIS 2018

2018